Research, design, test, iterate

Design is a process for solving problems. I like to get paid by helping people solve theirs. Invariably, they're ones that I never even knew existed!

1. Research

Helping to solve other people's problems starts with understanding them. Research is the step that allows us to do this. Though I'm not a specialist researcher, I absolutely believe in its value as part of any good design process.

"Poor user experiences inevitably come from poorly informed design teams."

Where possible I like to work with dedicated researchers and have found their specialist training invaluable on larger, more complex projects or those where the subject matter is particularly sensitive.

When this isn't possible, I look to organise and conduct a simple research plan, including:

- Kick off meeting

- Customer and stakeholder interviews

- Data analysis

- Competitor review

Kick off meeting

The kick off meeting is the opportunity to get to know all the project stakeholders and discuss their project goals. It allows us to ensure those goals are commonly shared and also gather any initial insights and ideas the team may have. As research progresses these ideas can then be put to the test to make sure they're valid.

Customer and stakeholder interviews

Of all the many tools, techniques and research approaches available, I feel one-to-one interviews are probably the most effective way of really understanding people's thoughts and motivations.

I've conducted interviews with both customers and project stakeholders in order to draw out useful insights and identify any recurring themes captured within these discussions.

Customer interviews help to understand how people use the product or service in question and their motivations for doing so. With project stakeholders you can probe how they do their jobs, their workflows, their processes and the problems they face. The insights gained from both allow the development of a solution that not only meets the requirements of those who will use it, but is also maintainable.

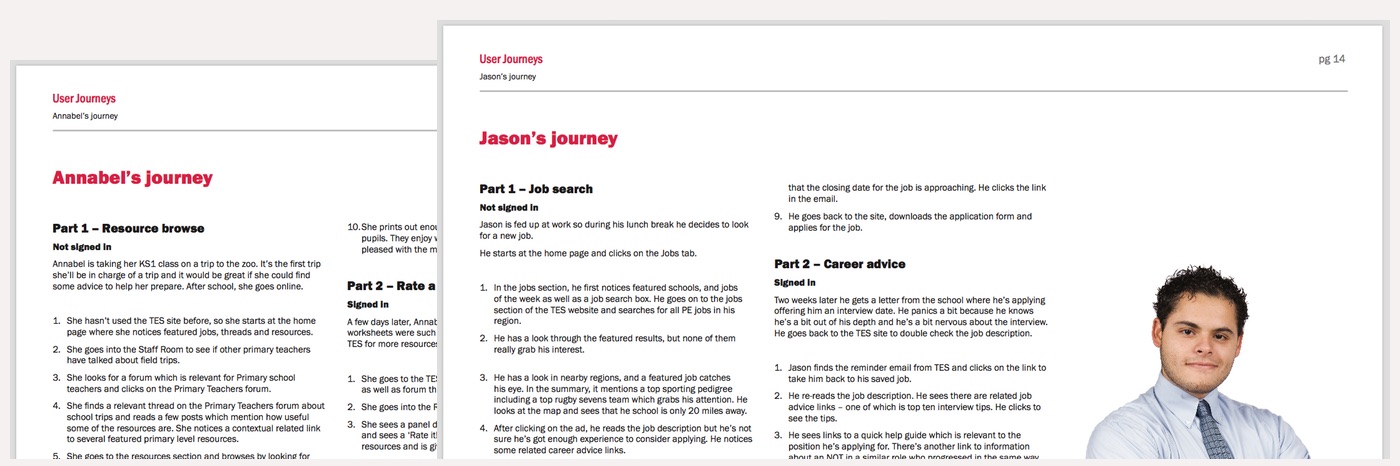

These findings are usually captured in a short report or presentation and transformed into personas that are used later in the design process to help guide design decisions.

Data analysis

Though qualitative research helps to understand how people make decisions, to me there's nothing like hard data to truly understand what people actually do. I like to study the numbers to identify any specific pain points within the customer experience and see whether they support the insights gathered from the interviews.
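
As a rough illustration of what "studying the numbers" can look like, here is a minimal Python sketch that finds the step in a customer journey where the largest share of people drop out. The step names and counts are entirely hypothetical, and the helper functions `drop_off_rates` and `worst_step` are made up for this example rather than taken from any analytics tool.

```python
def drop_off_rates(funnel):
    """For each step after the first, return (step name, share of people lost
    between the previous step and this one)."""
    rates = []
    for (_, prev_count), (name, count) in zip(funnel, funnel[1:]):
        lost = (prev_count - count) / prev_count if prev_count else 0.0
        rates.append((name, lost))
    return rates


def worst_step(funnel):
    """Return the (step name, drop-off share) pair with the highest drop-off."""
    return max(drop_off_rates(funnel), key=lambda rate: rate[1])


# Hypothetical journey: (step name, number of people who reached it).
funnel = [
    ("landing", 1000),
    ("basket", 400),
    ("checkout", 300),
    ("confirmation", 150),
]

for name, lost in drop_off_rates(funnel):
    print(f"{name}: {lost:.0%} lost since previous step")

print("Biggest pain point:", worst_step(funnel)[0])
```

A figure like this only says where people leave, not why, which is where the interview findings come back in.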

Competitor review

Competitor reviews are a useful tool for gaining insight into how the competition is meeting the needs of the market and identifying opportunities to offer a better service. I try not to use them for looking too closely at specific design solutions though, as these can be misleading. Without being privy to the original design decisions and their measures of success, there's little way of telling whether their approach will work for you.

2. Design

With these research inputs, we're able to move into the design phase with a clearer understanding of the problem.

As much as possible this stage should be one of continual creation, testing and evaluation of concepts. That way you know whether you're on the right track and, if not, can get yourself back on it sooner.

I usually like to approach it in the following way:

- Evaluate constraints

- Content strategy

- Task analysis

- Information design

Evaluate constraints

No idea, however brilliant, is any good if it can't be delivered. Very early on I like to understand where the key challenges are likely to be. Invariably all design solutions have an impact on the business in some way (staffing, workflow, infrastructure, running costs) and it's good to ensure aspirations are kept in line with any hard constraints.

Content strategy

A product or service exists either to provide people with information or enable them to complete a specific task.

If a solution is likely to be particularly content rich, I like to use stakeholder workshops to work through the details: what exists today, who it's for and in what circumstances, what its structure looks like, and how it will be created and maintained.

The outcomes of these are generally captured in deliverables such as:

- Content audits and inventories

- Content creation and maintenance guidelines

- Content templates

- Workflow recommendations

Task analysis

With more task-orientated products and services it's important to understand the context in which people will interact with them.

I generally create context scenarios, draw process flows or write use cases as the first step in defining how an interface will support a user in accomplishing their goals, detailing the ideal user interactions.

From here I begin to explore less common scenarios, or edge cases. In fact, I prefer Eric Meyer and Sara Wachter-Boettcher's description of them as 'stress cases':

"…stress cases: the moments that put our design and content choices to the test of real life."

In my mind, this is where good products shine through and where most of the design work really happens. By their very nature, stress cases are far more likely to occur at times of anxiety or stress and therefore stand to demonstrate the true regard you have for your customers.

"Edge cases define the boundaries of who [and] what you care about."

From these context scenarios and process flows, the functional, data and contextual requirements can then be extracted. I generally capture these as textual descriptions in a requirements spreadsheet or as user stories.

Information design

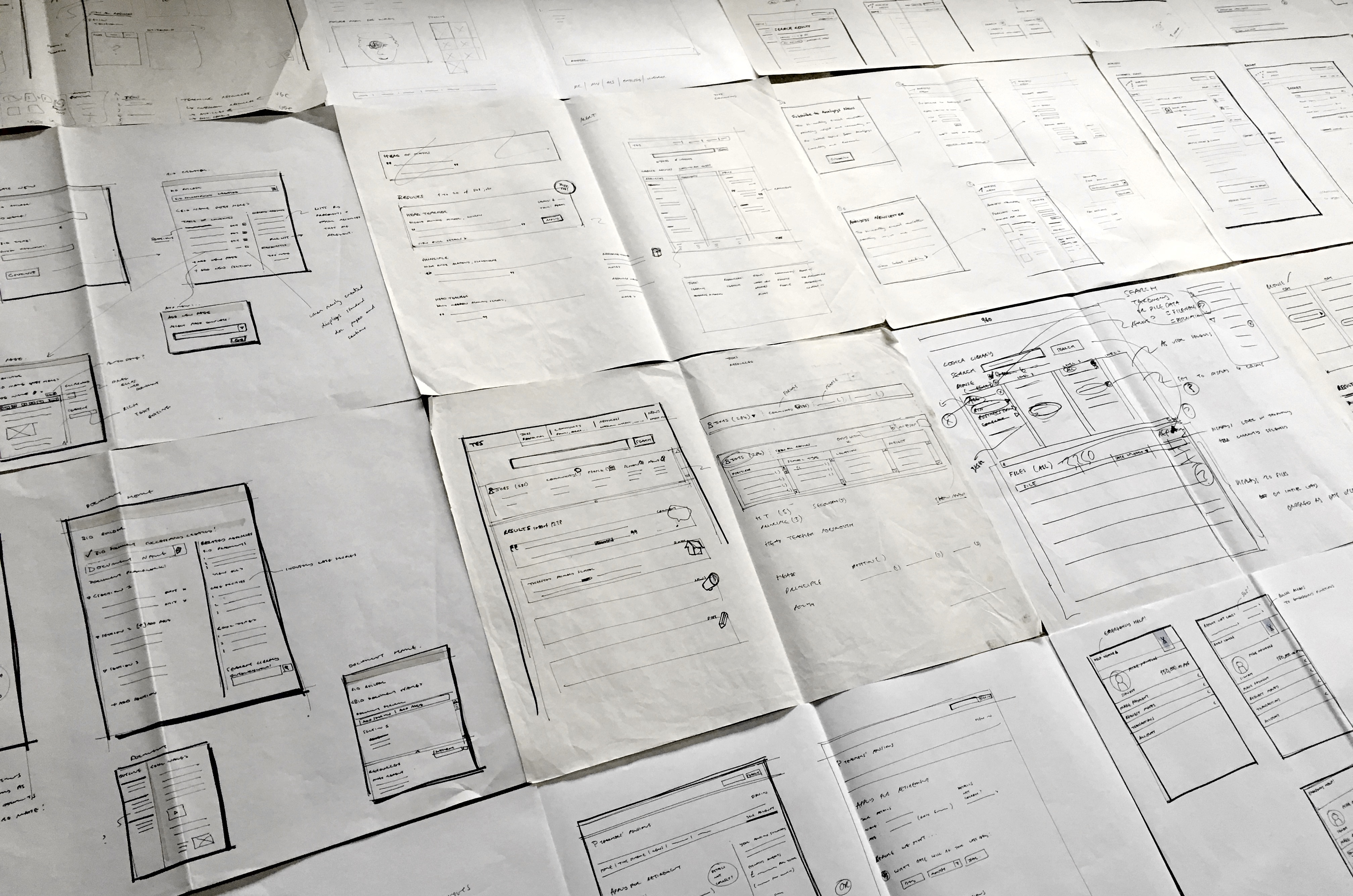

The next step is to start defining the key design framework. Building on the context scenarios, process flows or content templates, I usually begin by creating storyboards — a series of low-fidelity sketches that outline the interface structure. This is also the time I begin to look at the overall information architecture and navigation structure.

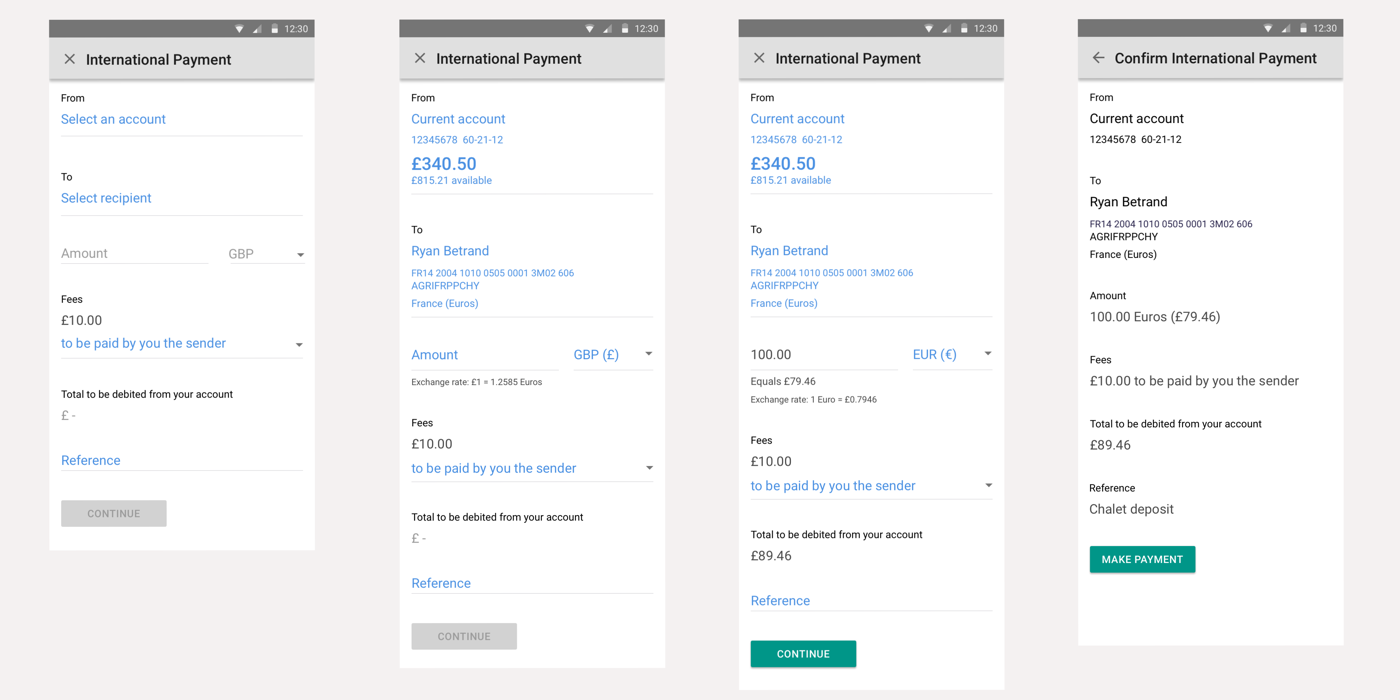

From here I begin to focus on sketching the details of individual screens before taking them on to the next stage of fidelity as wireframes and interactive prototypes.

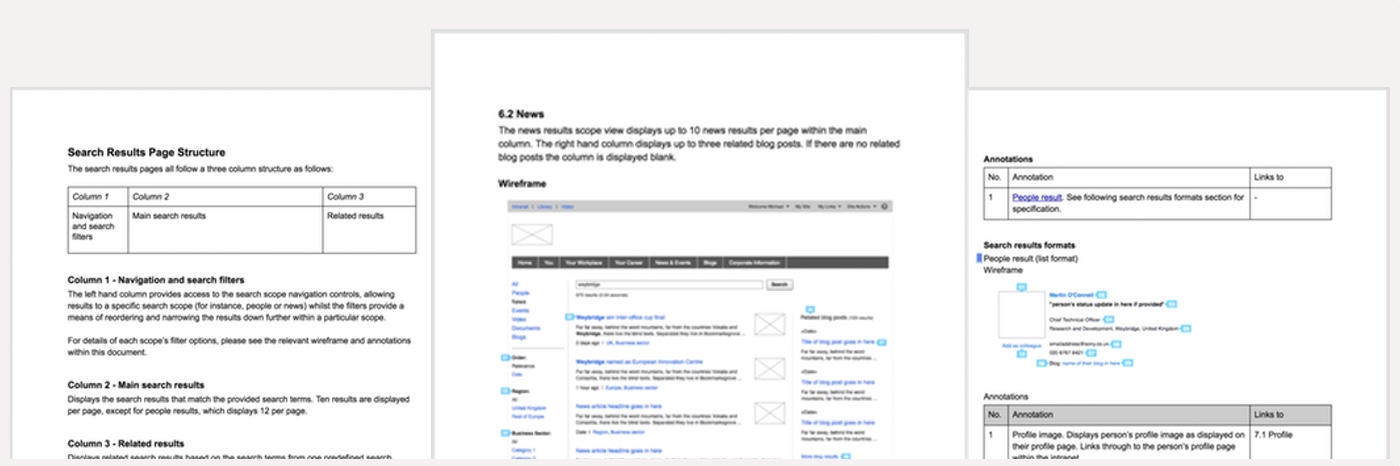

This then becomes a process of continuous refinement as concepts are tested and validated and revisions are made. The final outputs of this phase are then generally captured in a user interface specification.

More recently I've been looking into approaches (such as Brad Frost's atomic design) that allow you to get into 'code' more quickly. My feeling is that the earlier you can see concepts in the format they'll end up in, the better and more informed your design decisions will be. I also believe it naturally encourages closer collaboration between design and development, leading to a better informed team and consequently a better product.

3. Test

Though testing is last on the list, by no means do I recommend leaving it until the end! Ideally I like to test throughout the design phase, keeping it as simple and informal as possible in order to quickly validate and iterate concepts and ideas.

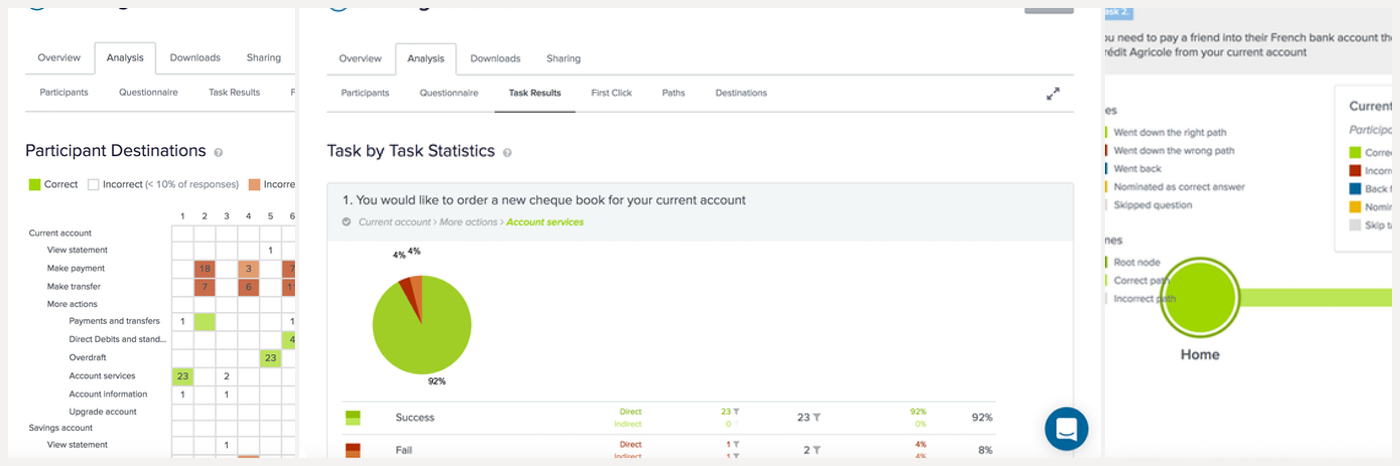

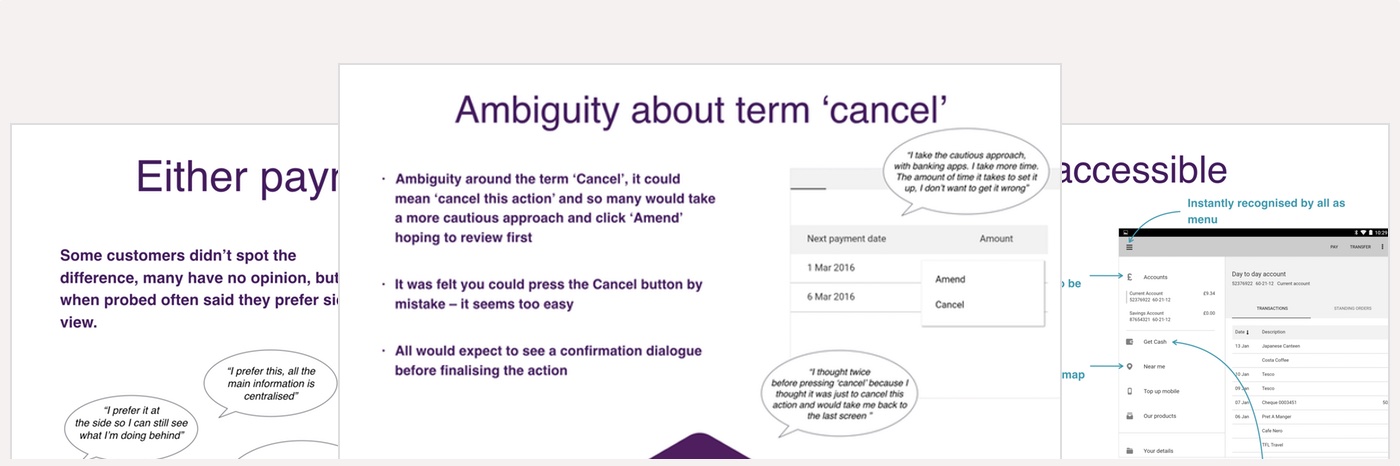

This can range from testing and validating information architectures with the use of card sorting or Treejack tests, checking the use of language and comprehension of content, through to usability testing of an interface using sketches, wireframes or interactive prototypes.
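
To give a flavour of how card sorting results can be read, here is a minimal Python sketch that counts how often participants placed two cards in the same group. It is an illustration under my own assumptions, not how Treejack or any other tool works internally: the card names, the three participants' sorts and the helper functions `co_occurrence` and `agreement` are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count, for every pair of cards, how many participants grouped them together."""
    counts = Counter()
    for groups in sorts:
        for group in groups:
            # Sort the names so each pair is always stored in the same order.
            for pair in combinations(sorted(group), 2):
                counts[pair] += 1
    return counts


def agreement(sorts):
    """Share of participants (0 to 1) who grouped each pair of cards together."""
    total = len(sorts)
    return {pair: n / total for pair, n in co_occurrence(sorts).items()}


# Hypothetical results: one list of groups per participant.
sorts = [
    [{"Pricing", "Plans"}, {"Contact", "Help"}],
    [{"Pricing", "Plans", "Help"}, {"Contact"}],
    [{"Pricing", "Plans"}, {"Contact", "Help"}],
]

for pair, share in sorted(agreement(sorts).items(), key=lambda item: -item[1]):
    print(f"{pair[0]} + {pair[1]}: {share:.0%} of participants")
```

Pairs with high agreement are good candidates to sit together in the navigation, while pairs that split participants are worth exploring further in testing.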

When possible I also like to get the wider project team to see customers using the product or service first-hand. Generally, I organise usability testing sessions in the lab and have a third party moderate them. Not only does this ensure I don't inadvertently bias the findings (it's always difficult to stay perfectly neutral when it's your work under scrutiny), but it also allows me to discuss with the team what we see as it happens.

Testing should not be a one-off event, and the opportunity to conduct it should never be confined to just the design phase. Even once a project has moved through development and on to release, capturing how well it is performing allows plans to be made for its future improvement.